Bullshit Detectors

Tools to build BS filters

There's a lot of BS around at the moment, from my mate Paul, who is convinced he can count the atoms in his eyes (spoiler: there are roughly 10^24 of them, Paul), through to AI vendors promising to do the work of a hundred people, flawlessly, for only $9.99/month.

What is bullshit? It's not quite the same as lying; instead, it's a complete indifference to the truth. The sole goal is persuasion with smoke, mirrors and any other tools the producer wants to throw at you. Social media (and AI) have made muck-spreading all the easier.

One of the most frustrating things about BS is summarized by Alberto Brandolini:

The amount of energy needed to refute bullshit is an order of magnitude bigger than that needed to produce it.

We waste so much time with BS. So let's fix that. In this essay, I'll try to avoid creating any more BS and give you a bunch of tools for detecting and preventing it.

Types of BS

There are many types of BS.

There's philosophical bullshit. These are word salads that sound deep but say nothing! This new age bullshit generator allows you to create lovely examples.

Linguistic bullshit tries to hide the lack of substance with buzzwords and acronyms. This is popular on social media with phrases such as "Studies show" (with no evidence) or dismissing alternative views ("just another opinion").

Analytical bullshit throws numbers into the conversation without scale or relevance. That might be a number without evidence ("1 million people can't be wrong!"), or adjusting the scales on a graph or applying some kind of advanced analysis on dodgy inputs. AI hallucinations excel here, confidently stating "87% accuracy" when they've made up both the number and the metric.

Technological bullshit is one of my favourites! AI is a new entry here (and an easy thing to blame), but tech has always done this, dressing up solutions with needless technical gubbins to signal expertise. "Our blockchain-powered, quantum-ready solution leverages synergistic algorithms" usually means "we made an app."

Organizational bullshit is rife with clichés and decision-based evidence making (where "evidence" is collected after a decision is made). Not to mention "strategy" statements that feel good but offer no guidance!

Social bullshit is amplified by social media. Dunning-Kruger gives rise to a bunch of people (myself included!) speaking with unwarranted certainty beyond their expertise. And with the network effect, repetition helps reinforce the BS. Add to that the social media bubble and all you hear is the BS you spout. Perfect!

Professional bullshit is everywhere. Folks who know everything but do nothing. Success is due to them, failure is due to others. These are the people who "add value" and "move the needle" but can't explain what they did yesterday.

Why do we fall for BS?

Everyone likes to sound as if they are smart. Ask anyone and they’ll be in the top 50% of intelligence! But if that’s true (and it’s not), why are smart people vulnerable to bullshit?

Firstly, we’re human and inherently lazy. If someone is promising a solution to a hard problem we face, it’s only natural to be receptive. Targeted BS preys on the chimp part of your brain, encouraging an immediate reaction.

Repeated bullshit claims do the same job as marketing. Even if you’re not directly affected by BS, that lazy click to repost implicitly signals your support for whatever nonsense is being spouted.

And some of us can be sensitive to particular types of statements that seem “deep” but really contain no meaning, such as “consciousness is the growth of coherence, and of us” (an example from the Bullshit Receptivity Scale).

BS can spread because of power differentials (particularly in office environments). If leadership sets the tone and reinforces it at every meeting, it simply becomes the truth, because if you disagree you could lose your job! Worse yet, low psychological safety creates a culture where you not only fall for the BS but contribute to it (perhaps by disregarding the evidence that disagrees).

Filtering bullshit

As an individual, what can you do to build anti-BS habits?

Understand your cognitive biases! Everyone has these; they are shortcuts wired into the brain. Being aware of cognitive bias helps you spot when the shortcuts aren't warranted and when to engage slower, more deliberate Type 2 thinking.

Treat claims as hypotheses (not facts), and start from a position of scepticism! Carl Sagan's book "The Demon-Haunted World" is a great place to start.

The SIFT method provides a quick guide to handling misinformation. First, stop! Don't share things reflexively, and don't comment! Investigate the source: would you trust the creator if they were saying something you disagreed with? Find alternative coverage: is there another independent source reporting the same information? Trace it back: what was the original context?

Build data literacy. These are the skills to understand, analyse, interpret and communicate data. For example, when someone says "20% growth" what do they mean? If the whole industry is growing at 50% this might be terrible. What's the group that's being measured? What assumptions have been made?
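To make the "20% growth" example concrete, here is a minimal sketch of the arithmetic. The starting market share of 10% is an assumption invented for illustration, and `market_share_after` is a hypothetical helper, not something from a real library.

```python
def market_share_after(share_before: float, own_growth: float, industry_growth: float) -> float:
    """Return market share after one period, given fractional growth rates."""
    # The company's sales grow by own_growth while the whole market grows by
    # industry_growth, so the share scales by the ratio of the two factors.
    return share_before * (1 + own_growth) / (1 + industry_growth)


# Assumed starting point (not from the essay): the company holds 10% of its market.
share_before = 0.10
share_after = market_share_after(share_before, own_growth=0.20, industry_growth=0.50)

print(f"Share before: {share_before:.1%}")
print(f"Share after:  {share_after:.1%}")
```

Under those assumptions, "20% growth" means the company's share of the market falls from 10% to about 8%. The headline number is true and the company is still losing ground, which is why the comparison group matters.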

At the team level you can build some immunity to BS by putting in appropriate processes.

You can build psychological safety so people are free to express dissent, fear and new ideas. As a quick example, if you're a leader, reward people when ideas are falsified.

Don't let BS hide in complexity. Cultivate a culture where concepts are explained in as few simple words as possible. It's much more difficult to hide bullshit in a two-page memo than in a thirty-page essay. ("Consequences of Erudite Vernacular Utilized Irrespective of Necessity: Problems with Using Long Words Needlessly" explores this and has a great title!)

Make data-driven decisions. If you suspect BS, ask for the data. If the idea isn't bullshit, it can't be that hard to find some evidence? And once you get the data, make sure the team has the same understanding! Again, you have to have that culture (that word again!) where doing so isn't met with contempt!

Add some friction in decision-making. With BS about, you want some deliberate points in your team processes that allow you to take a step back.

For larger changes, such as the organizational ones (product roadmaps, strategy), you've got to convince a great number of people that you're doing a thing for the right reasons.

Avoid corporate bullshit words. If it's a transformational synergy, then it's full of crap. Instead, replace them with measurable claims and predicted effect sizes ("by restructuring the teams we expect output to grow by 5% by 2026", not "this initiative will unlock unprecedented value").

What didn't you do? Bullshit thrives if it's presented as the only option. If you've made a measured choice, then not only should you have good reasons for it, but you must have considered some alternatives? Right?
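The "avoid corporate bullshit words" advice above can be roughed out as a tiny checker. This is only a sketch: the `BUZZWORDS` list and the `review_claim` helper are hypothetical, and "contains a digit" is a very crude stand-in for "makes a measurable claim".

```python
import re

# Hypothetical, deliberately tiny buzzword list; extend it for your own organisation.
BUZZWORDS = {
    "synergy", "synergistic", "transformational",
    "unlock", "unprecedented", "leverage", "leverages",
}


def review_claim(claim: str) -> dict:
    """Flag known buzzwords and note whether the claim contains any number."""
    words = re.findall(r"[a-z]+", claim.lower())
    found = sorted(set(words) & BUZZWORDS)
    # Crude proxy: any digit counts, so a bare year would also pass this check.
    has_number = bool(re.search(r"\d", claim))
    return {"buzzwords": found, "has_number": has_number}


vague = review_claim("this initiative will unlock unprecedented value")
measurable = review_claim("by restructuring the teams we expect output to grow by 5% by 2026")

print(vague)
print(measurable)
```

The vague statement is flagged for two buzzwords and no numbers, while the measurable one has no flagged words and at least one figure to argue about. A check like this can't tell you whether the 5% is plausible; it only prompts the question.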

Calling out BS (without getting fired)

BS has social costs too. You can’t just yell “BULLSHIT!” in a meeting (well, I suppose you could, but I suspect only once). Instead:

Why?: Embrace your inner child and ask “why” frequently.

Ask for clarification: "Could you help me understand what specific metrics we'll use to measure that?"

Request examples: "That sounds interesting—do we have any case studies where this worked?"

Propose tests: "What if we piloted this with a small group first to validate the approach?"

And when you catch yourself spreading BS (we all do it), own it quickly: "Actually, I just realized I don't have data to back that up. Let me get back to you."

Bullshit in the age of AI

LLMs are the ultimate bullshitters. They use all the tricks humans do (artificial complexity, presenting data as facts) and they do it with almost perfect grammar. And they never tire! Infinite bullshit machines.

Users should not rely on Claude as a singular source of truth and should carefully scrutinize any high-stakes advice given by Claude. (from https://support.claude.com/en/articles/8525154-claude-is-providing-incorrect-or-misleading-responses-what-s-going-on)

One approach to avoiding AI bullshit is to avoid LLMs entirely. I don't think that's a realistic option for most people; LLMs can be a tremendous productivity boost. Instead you should:

Identify what you're using an LLM for - If the stakes are high and your expertise is low, you are in the perfect zone for being susceptible to bullshit. Activate all filters! I explore this more here!

Validate sources - If an LLM says something, you should be able to find an independent supporting statement. If you can't, it's probably BS.

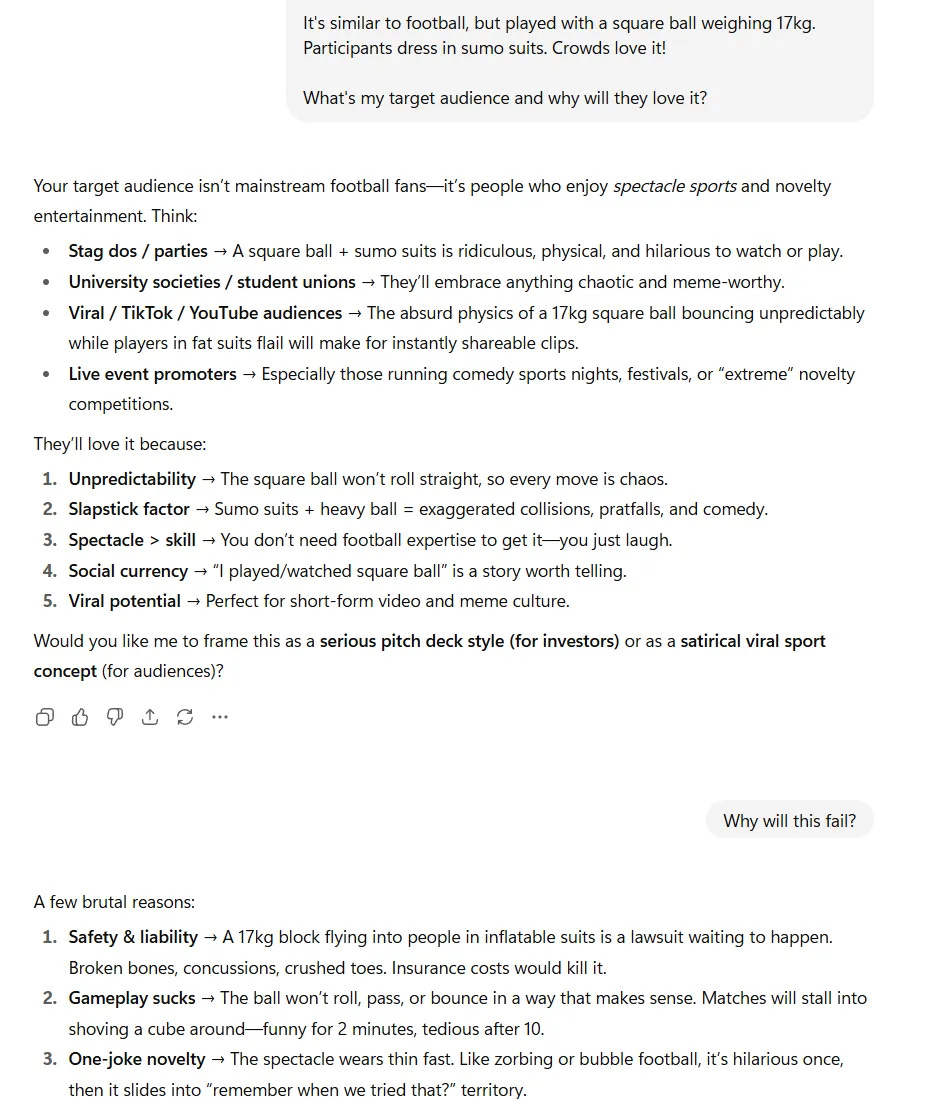

Adversarial prompts - LLMs are very agreeable. They seldom say "no" because they are trained to answer questions. They'll give you ten reasons why any idea will succeed, but they'll also play the other side and give you ten reasons why it'll fail, so ask for both.

To test this, I asked Claude about my revolutionary sports idea:

We’re at the end now

We live in an age of industrial-strength bullshit. AI generates convincing nonsense, social media shares it and the sheer volume of it is overwhelming.

Learning to filter BS rapidly is arguably one of the most important skills you can develop.

Pause - before doing anything, stop and ask yourself “am I amplifying bullshit?”

Seek data - bold claims require evidence - where is it?

Check the source - when AI gives you a “fact”, double-check!

Simplicity - if you rewrote the claim in simple language, would it disappear?

The goal isn’t to become a sceptical killjoy who questions everything; it’s about developing better instincts to know when you should raise the BS filter!

Here's my challenge to you (honestly, AI says I should give you a challenge to make it more engaging; this might itself be BS I’m falling for). For the next week, before you share, repost, or even nod along to something, pause for five seconds and ask yourself: "Is this BS?" You might be surprised how often the answer is yes. And more importantly, you might be surprised how much clearer your world becomes when you stop letting the BS in.